Public Sector Test & Evaluation

Test and evaluate AI for safety, performance, and reliability.

Evaluate AI Systems

Test Diverse AI Techniques

Public Sector Test & Evaluation for Computer Vision and Large Language Models.

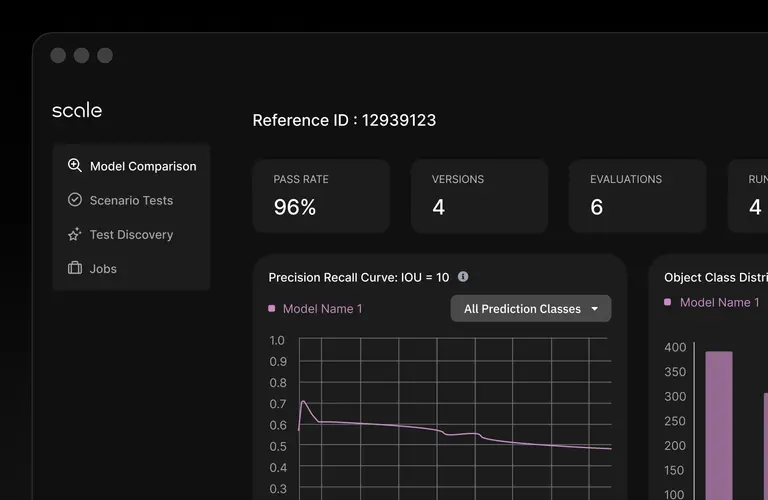

Computer Vision

Measure model performance and identify model vulnerabilities.

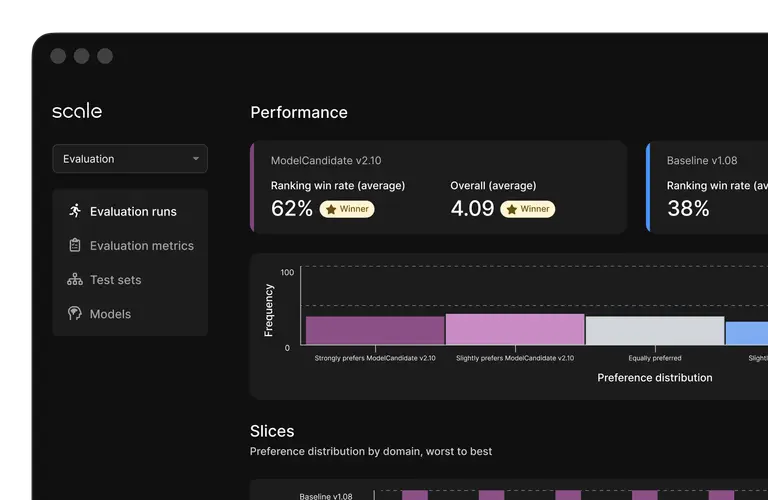

Generative AI

Minimize safety risks by evaluating model skills and knowledge.

Why Test & Evaluate AI

Protect the rights and lives of the public. Ensure AI can be trusted for critical missions and workflows.

Roll Out AI with Certainty

Have confidence that your AI is trustworthy, safe, and meets benchmarks

Ongoing Evaluation

Continuously evaluate your AI models so updates are safe and the models remain fit for ongoing use

Uncover Model Vulnerabilities

Simulate real-world contexts to mitigate unwanted bias, hallucinations, and exploits

Holistic evaluation that assesses AI capabilities and determines the level of AI safety

Combine human experts and automated benchmarks to evaluate models accurately and at scale

Flexible evaluation framework that adapts to changes in regulations, use cases, and models

Why OpenWay AI

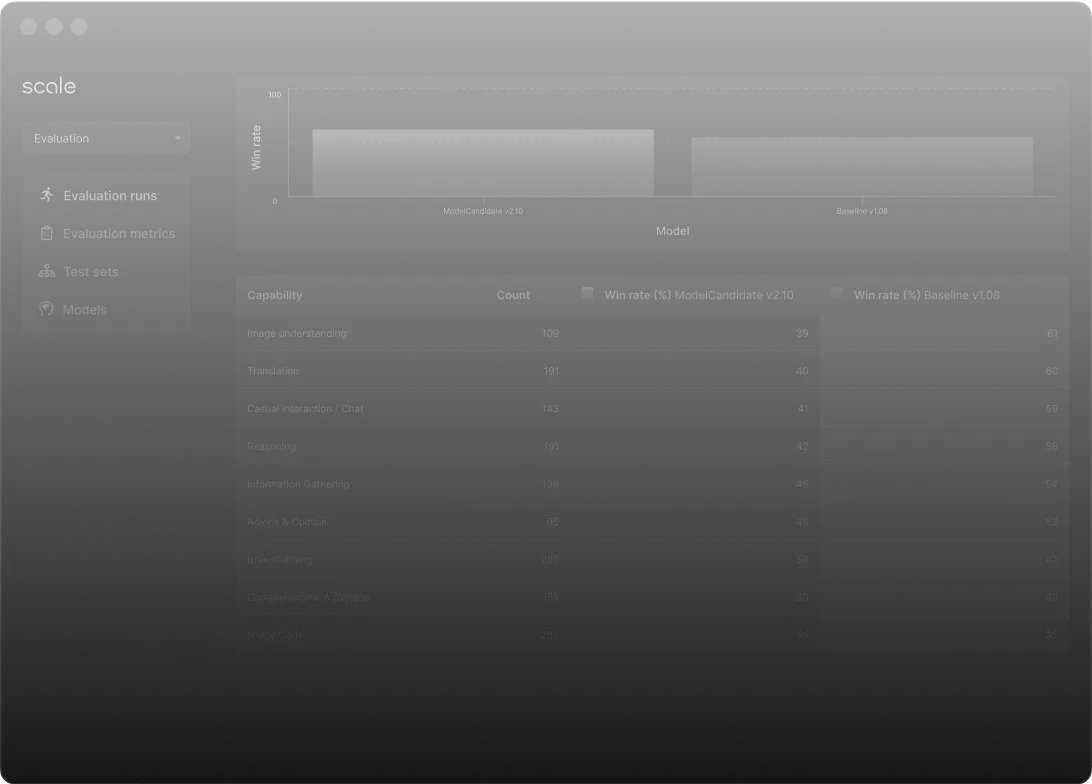

Test & Evaluate AI Systems with OpenWay Evaluation

OpenWay Evaluation is a platform that covers the entire test & evaluation process, providing real-time insight into performance and risk so you can ensure AI systems are safe.

Bespoke GenAI Evaluation Sets

Unique, high-quality evaluation sets across domains and capabilities ensure accurate model assessments without overfitting.

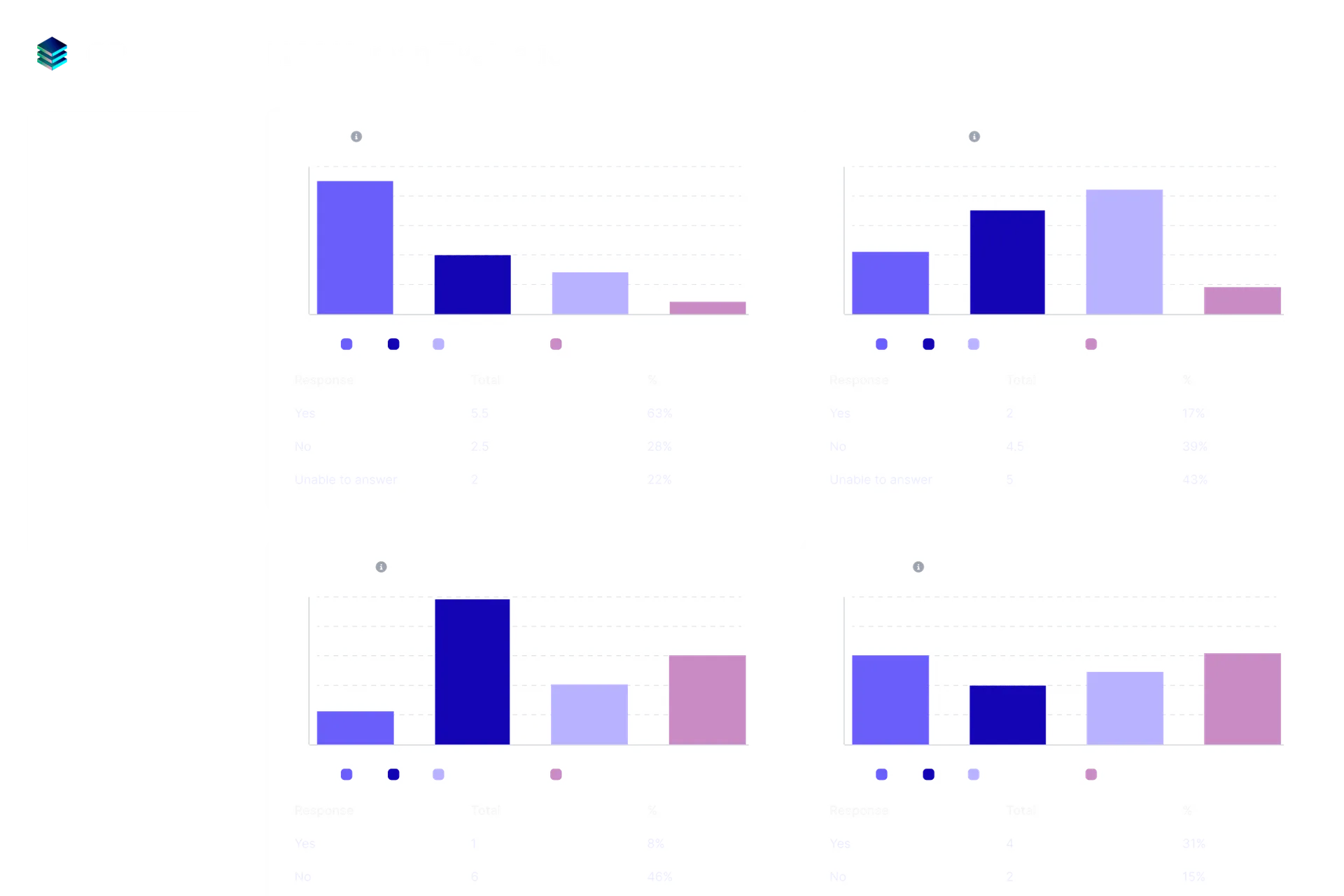

Rater Quality

Expert human raters provide reliable evaluations, backed by transparent metrics and quality assurance mechanisms.

Reporting Consistency

Standardized model evaluations enable true apples-to-apples comparisons across models.

Targeted Evaluations

Custom evaluation sets focus on specific model concerns, enabling precise improvements via new training data.

Product Experience

A user-friendly interface for analyzing and reporting on model performance across domains, capabilities, and model versions.

Red-teaming Platform

Reduce generative AI risks and algorithmic discrimination by simulating adversarial prompts and exploits.